Documenting Gesture Designs using Natural Language Generation (2019–present)

We have begun developing a natural language generation (NLG) module to automatically generate documentation of gesture definitions created by visualization designers. The main goal of this work is to help gesture developers better understand gestures by providing a text-based summary to accompany the declarative code in the four expressions that define each gesture in the gesture architecture. This text-based summary will help gesture developers see how event patterns map into the visual appearance and data editing behavior of each gesture.

Natural language generation is a well-explored area of research. We reviewed the NLG literature and identified two major NLG approaches as candidates for development. The most widely used approach is the statistics-based approach. Because our dataset is small (we expect relatively few gestures even in a future, mature, diverse library), we decided this approach would not be suitable. We opted instead for a template-based approach. This approach allows us to directly map our inputs into outputs via a set of rules. Mapped outputs can be partially complete, offering the flexibility to fill in gaps at a later stage prior to presentation to the visualization designer in a gesture-design user interface. Importantly, this approach is compatible with the live editing of expressions that happens in the builder user interface used by visualization designers in Improvise.

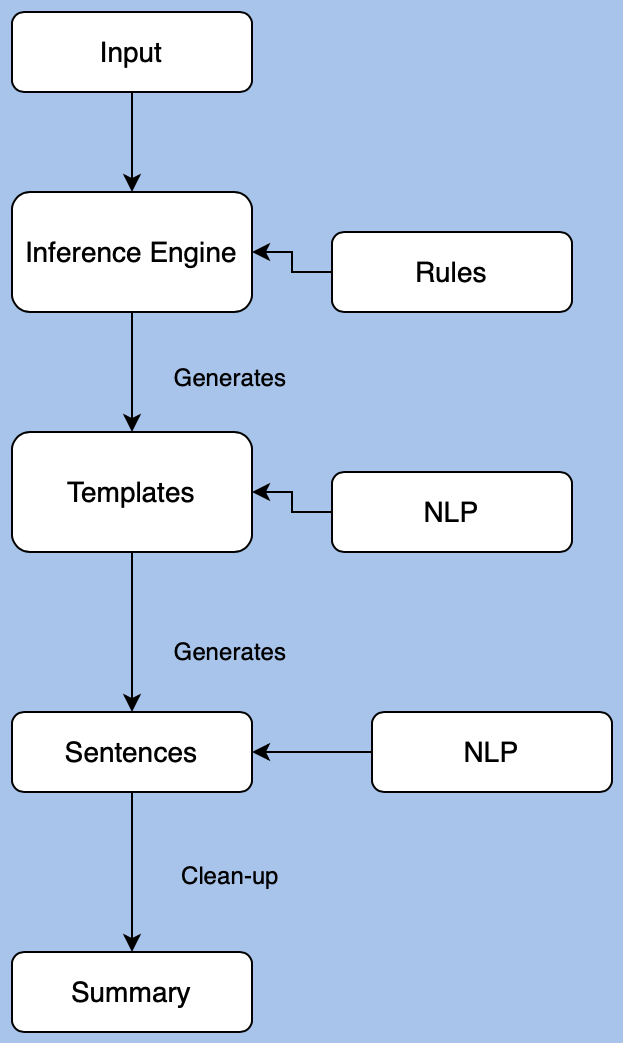

Our first-cut gesture documenter framework has four stages (inference, template, sentence, summary). As shown below, the underlying NLG system will be designed to progress through the stages one by one, take the information from each stage, and compose it into a final summary that is grammatically correct and readily understandable. The inference engine generates templates based on the given rules and the input. The templates are later filled in using data provided in the input. Once a cycle is done, we take all the obtained sentences and combine them into a summary. Finally, we apply common natural language processing (NLP) techniques to clean up the summary for presentation.
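
The four stages can be sketched as a chain of small functions. This is only an illustrative sketch, not the documenter's actual code: the function names, the `(symbol, value)` input format, and the two-entry template table are all assumptions made for the example, and the final clean-up step is reduced to whitespace normalization as a stand-in for the NLP techniques mentioned above.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical input item: a grammar symbol plus an optional value for its slot.
Item = Tuple[str, Optional[str]]

# Hypothetical template table; "[ ]" marks a slot that may be filled later.
TEMPLATES: Dict[str, str] = {
    "P": "The mouse event performed is a press.",
    "view": "The visual feedback is a [ ].",
}

def infer(items: List[Item]) -> List[Item]:
    """Inference stage: keep only the symbols that a rule/template covers."""
    return [(sym, val) for sym, val in items if sym in TEMPLATES]

def to_templates(items: List[Item]) -> List[Tuple[str, Optional[str]]]:
    """Template stage: look up the template for each inferred symbol."""
    return [(TEMPLATES[sym], val) for sym, val in items]

def to_sentences(pairs: List[Tuple[str, Optional[str]]]) -> List[str]:
    """Sentence stage: fill the slot when a value is available."""
    return [t.replace("[ ]", v) if v is not None else t for t, v in pairs]

def summarize(sentences: List[str]) -> str:
    """Summary stage: join sentences and normalize whitespace."""
    return " ".join(" ".join(s.split()) for s in sentences)

def document_gesture(items: List[Item]) -> str:
    """Run all four stages in order."""
    return summarize(to_sentences(to_templates(infer(items))))
```

For example, `document_gesture([("P", None), ("view", "circle")])` would pass through all four stages and yield a two-sentence summary; symbols without a template are dropped at the inference stage.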

This process entails a set of rules tailored for each of the four stages. We are developing the rule set stage by stage. At present our rule set is a small one, focused on achieving initial, readable but still crude language constructs in all four stages. Here are some example rules:

- Rule 1 ← (input) ::= (action) | (events) | (geometries) | (function)

- Rule 2 ← (events) ::= (P) | (R) | (C) | (D)

- Rule 3 ← (geometries) ::= (oval) | (polyline) | (line) | (cone)

- Rule 4 ← (action) ::= (selected) | (contains) | (location)

- Rule 5 ← (function) ::= (glyph) | (geom) | (data) | (view)
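
One possible way to hold rules like these in code is a plain mapping from each left-hand symbol to its alternatives. The sketch below is an assumption about representation, not the documenter's actual data structure; `RULES`, `category_of`, and `derives` are hypothetical names introduced for illustration.

```python
from typing import Dict, List, Optional

# The five example rules, one entry per left-hand side.
RULES: Dict[str, List[str]] = {
    "input": ["action", "events", "geometries", "function"],
    "events": ["P", "R", "C", "D"],
    "geometries": ["oval", "polyline", "line", "cone"],
    "action": ["selected", "contains", "location"],
    "function": ["glyph", "geom", "data", "view"],
}

def category_of(symbol: str) -> Optional[str]:
    """Return the left-hand side whose alternatives include `symbol`."""
    for lhs, alternatives in RULES.items():
        if symbol in alternatives:
            return lhs
    return None

def derives(symbol: str, start: str = "input") -> bool:
    """True if `symbol` can be reached from `start` by expanding rules."""
    if symbol == start:
        return True
    return any(derives(symbol, alt) for alt in RULES.get(start, []))
```

With this layout, adding a new geometry or event amounts to appending one string to a list, which fits the expectation that the rule set will grow incrementally.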

Here are some example templates:

- (selected) ::= The selection operation is performed.

- (selected) ::= The selected value is [ ].

- (P) ::= The mouse event performed is a press.

- (polyline) ::= The geometries involved in the gesture are poly lines.

- (view) ::= The visual feedback is a [ ].
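
Because a symbol such as (selected) can have more than one template, some policy is needed to pick among them. The sketch below assumes one simple policy that the source does not specify: use a slotted template when a value is available, otherwise prefer a complete one, and leave the `[ ]` slot untouched when nothing else fits so it can be filled at a later stage. The names `TEMPLATES`, `SLOT`, and `realize` are hypothetical.

```python
from typing import Dict, List, Optional

SLOT = "[ ]"  # placeholder marker used in the example templates

# The example templates, grouped by symbol (a symbol may have several).
TEMPLATES: Dict[str, List[str]] = {
    "selected": [
        "The selection operation is performed.",
        "The selected value is [ ].",
    ],
    "P": ["The mouse event performed is a press."],
    "polyline": ["The geometries involved in the gesture are poly lines."],
    "view": ["The visual feedback is a [ ]."],
}

def realize(symbol: str, value: Optional[str] = None) -> str:
    """Pick a template for `symbol` and fill its slot if a value is given."""
    candidates = TEMPLATES[symbol]
    if value is not None:
        # A value is available: use the first template that has a slot.
        for template in candidates:
            if SLOT in template:
                return template.replace(SLOT, value)
    # No value, or no slotted template: prefer a complete sentence.
    for template in candidates:
        if SLOT not in template:
            return template
    # Only slotted templates exist: return one partially complete.
    return candidates[0]
```

Under this policy, `realize("selected")` returns the slot-free sentence, while `realize("view")` returns a partially complete sentence whose gap remains open for later filling.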

And here are example summaries that arise from them for two gestures from the gesture gallery:

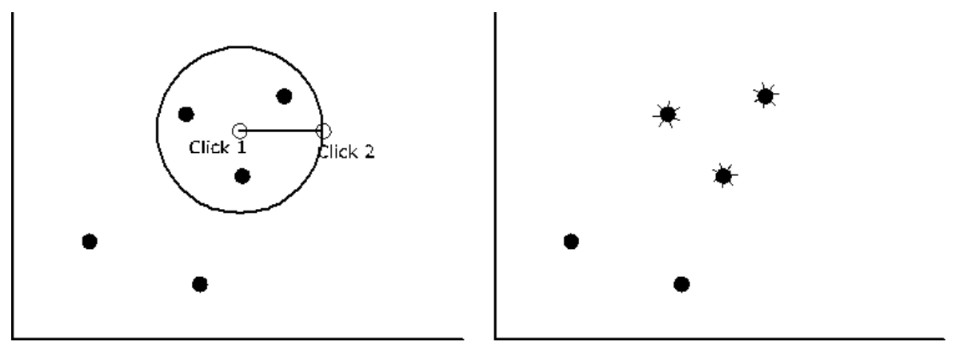

- Circle selection gesture: "The action performed is a mouse press action. This is the beginning of the gesture. This is the next step of the gesture. The action performed is a mouse drag. This is the end of the gesture. The action performed is a mouse release. The geometries involved are points, lines. The visual feedback is circle. The selection operation is performed on the item. The circle contains selected items."

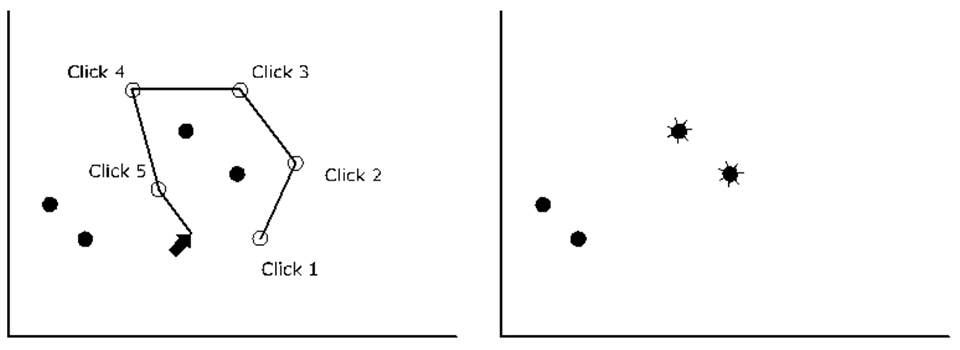

- Lasso selection gesture: "The action performed is a mouse click. This is performed multiple times. The geometries involved are points. The visual feedback is polylines. The selection operation is performed on the item. The polyline contains selected items."

We are early in the process of developing the gesture documenter. The rule set is small so far. However, we expect the implementation to be quick and straightforward to modify as the rule set expands to encompass more and more gesture definition features. Our hope is that, once mature, the gesture documenter will reduce the effort needed by visualization designers to learn and recall gesture definitions, and will serve as reference documentation for sharing gesture libraries between designers across visualization projects.