Gesture Gallery (2019–2020)

Development of the gesture gallery began in the Fall 2019 semester and has continued since then. After learning the goals and plan for the project, the students engaged in two concurrent activities: learning about approaches to and systems for coordinated multiple view visualization, and developing a gallery of gesture designs.

The gesture architecture is being implemented first as an extension of existing interaction and coordination capabilities in the Improvise visualization environment. Accordingly, the students were trained to design and implement visualizations in Improvise, with a special focus on its coordination features and on the basic data editing capabilities in Coordinated Queries, its declarative design language.

The students participated as a group in hands-on workshops, then worked individually to design potential gestures for selection, navigation, and annotation. We met frequently as a group to assess the individual designs and brainstorm about applications, improvements, and variations. Most of the gestures were iteratively modified and incrementally refined from their original conception. We developed formalisms to document key aspects of the designs and a simple categorization scheme for organizing them. The students used a cloud platform (iCloud) to collate their designs into a single book-like gallery (a shared Pages document).

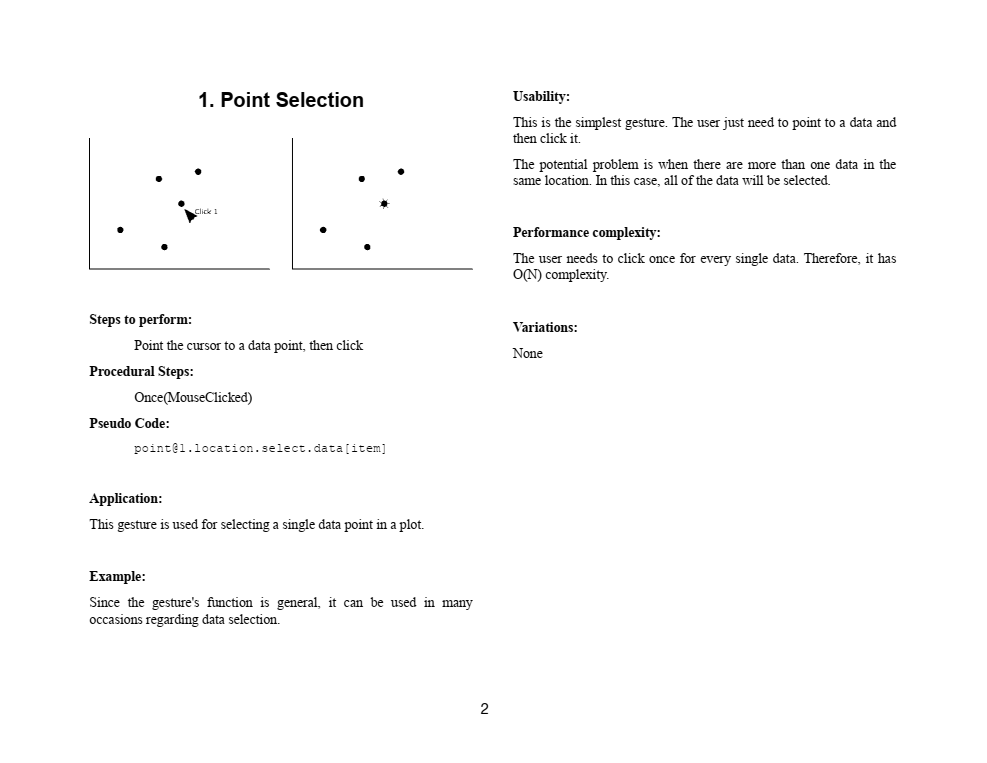

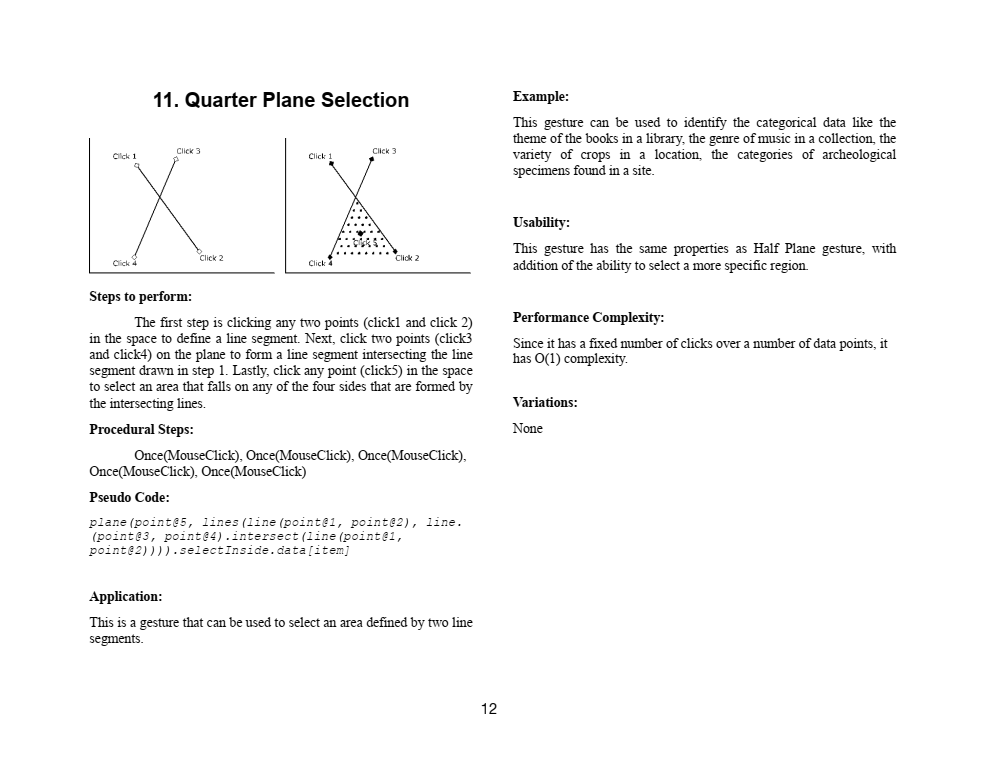

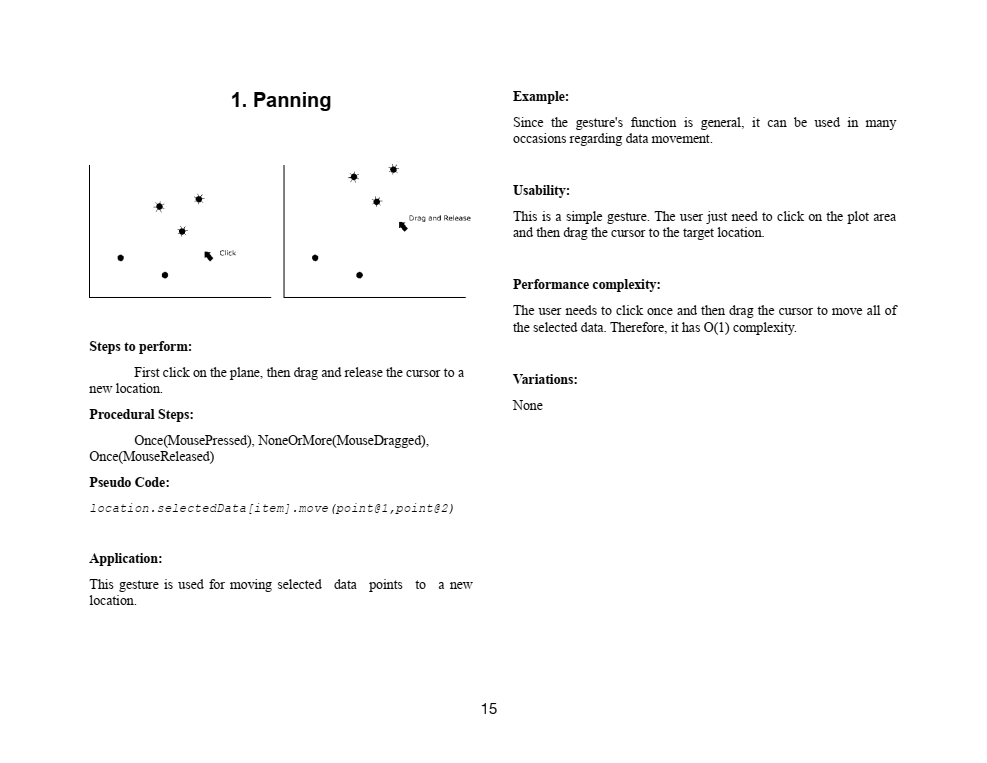

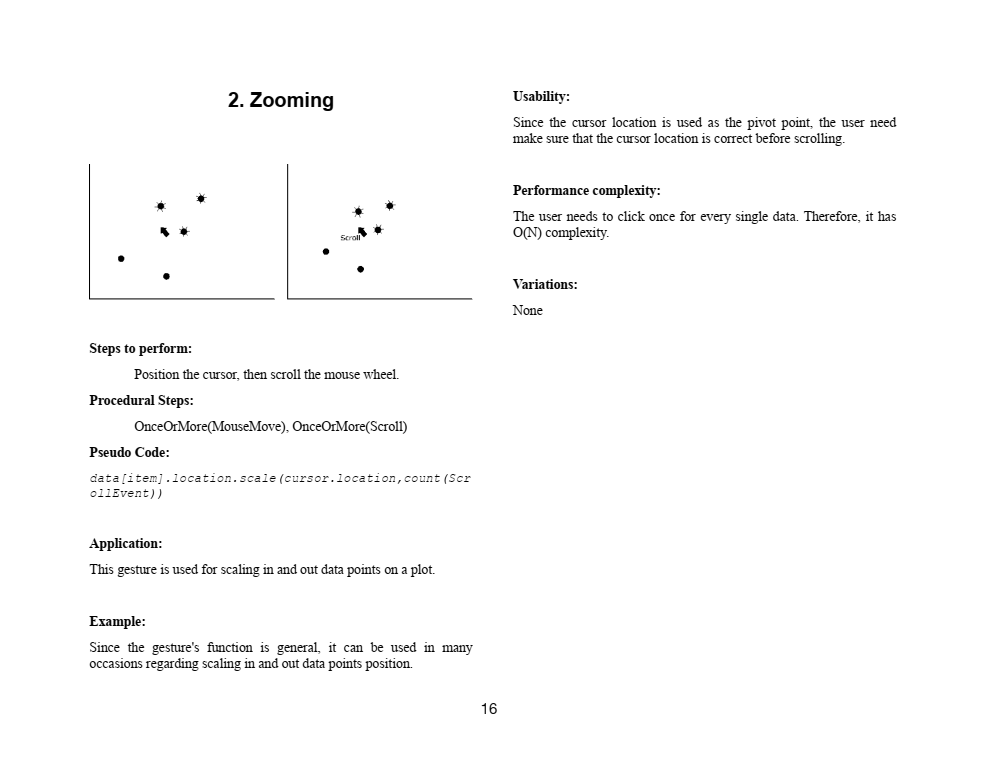

The examples in the gallery follow a common presentation format. The template is divided into several sections:

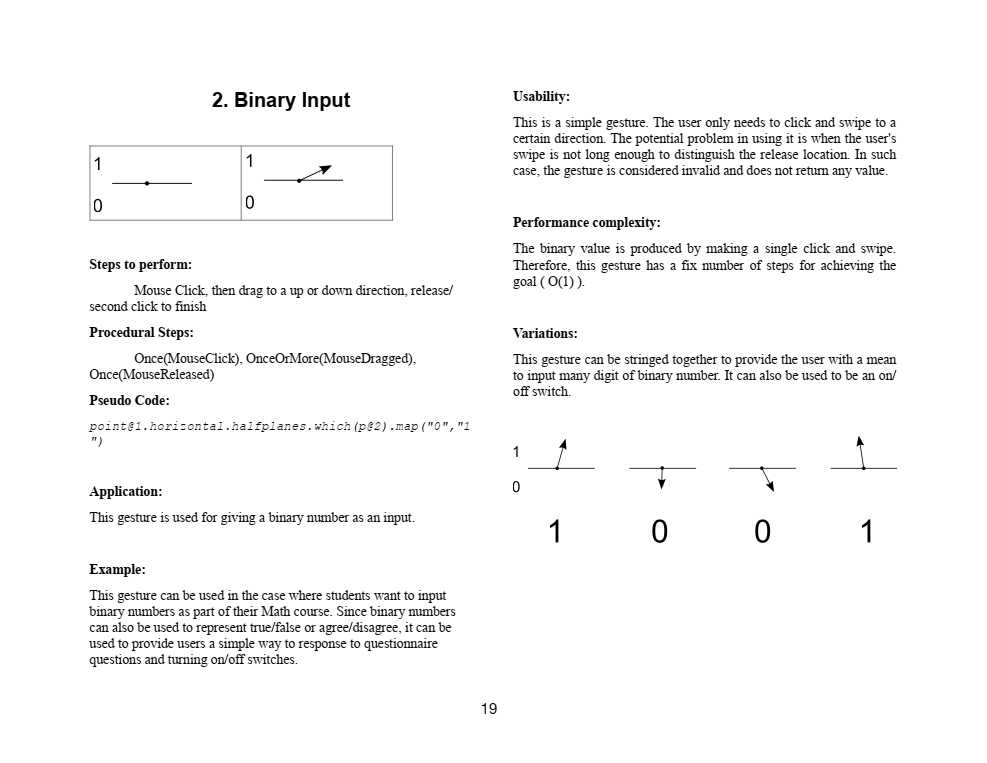

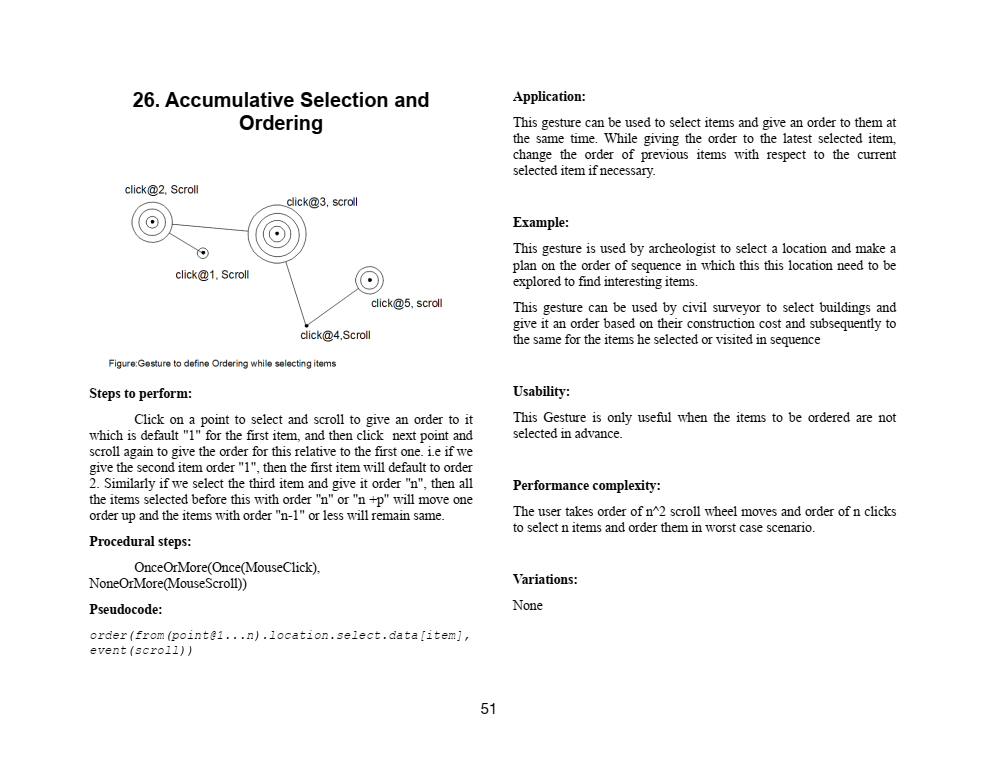

- A depiction of the gesture. The depiction conveys the order of input events needed to perform the gesture, along with other details of the gesture's underlying geometric shape.

- The steps to perform the gesture, described in detail from the user perspective.

- Procedural steps to perform the gesture, with the input pattern used to map input events into gesture matches.

- Pseudocode for the operation triggered by the gesture, conveyed in a suggestive declarative language style. (We use this style because it is straightforward and well suited to visualization pipelines.)

- General application of the gesture, describing typical use of the gesture in visualization designs.

- An example application of the gesture, outlining one or more specific ways to employ the gesture.

- Thoughts on usability, particularly speculation about where a user might have difficulty performing the gesture and how the gesture could behave differently given different input.

- Characterization of interactive performance complexity, a quantitative relationship between the amount of input and the amount of information change achieved, in a manner analogous to computational complexity.

- Anticipated variations, laying out some of the possible alternatives inspired by the gesture.
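The "input pattern" and "pseudocode" sections of the template can be made concrete with a small sketch. The following Python example shows one way an input pattern might map a sequence of input events to a gesture match and then trigger an operation. Everything here is a hypothetical illustration and does not come from Improvise or Coordinated Queries. The hypothetical pieces are the event vocabulary (`press`, `move`, `release`), the `InputEvent` class, the regular-expression encoding of the pattern, and the rectangle-selection operation.

```python
import re
from dataclasses import dataclass


@dataclass
class InputEvent:
    # Hypothetical event vocabulary: "press", "move", or "release".
    kind: str
    x: float
    y: float


# One-letter codes turn a sequence of events into a string that a
# regular expression can match against.
CODES = {"press": "P", "move": "M", "release": "R"}

# Hypothetical input pattern for a drag-style selection gesture:
# one press, at least one move, then one release.
DRAG_PATTERN = re.compile(r"PM+R")


def match_drag(events):
    """Return the (start, end) points if the events match the drag pattern, else None."""
    encoded = "".join(CODES[e.kind] for e in events)
    if DRAG_PATTERN.fullmatch(encoded) is None:
        return None
    return (events[0].x, events[0].y), (events[-1].x, events[-1].y)


def select_in_rect(points, start, end):
    """Operation triggered by the gesture: keep points inside the dragged rectangle."""
    (x0, y0), (x1, y1) = start, end
    # Normalize the corners so the drag may go in any direction.
    lo_x, hi_x = min(x0, x1), max(x0, x1)
    lo_y, hi_y = min(y0, y1), max(y0, y1)
    return [p for p in points if lo_x <= p[0] <= hi_x and lo_y <= p[1] <= hi_y]


if __name__ == "__main__":
    events = [
        InputEvent("press", 0, 0),
        InputEvent("move", 2, 1),
        InputEvent("move", 4, 3),
        InputEvent("release", 5, 5),
    ]
    corners = match_drag(events)
    print(corners)  # ((0, 0), (5, 5))
    print(select_in_rect([(1, 1), (3, 4), (6, 2)], *corners))  # [(1, 1), (3, 4)]
```

Encoding events as characters lets a standard regular expression stand in for the input pattern. A sequence such as press followed immediately by release does not match and yields `None`. A fuller recognizer would probably also check geometric properties of the stroke, which the gallery's depictions describe.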

The gallery is grouped into three types of input operations: selection, navigation, and annotation. The gallery (currently in version 4) contains a total of 45 gesture examples to date. Selection denotes the action of indicating a set or subset of data. Selection is usually accompanied by other operations that modify the data or the view. This group comes first in the gallery and so far includes twelve gestures, both common and exotic, such as:

Navigation generally refers to manipulating the coordinate system into which data is mapped in a visualization view, as opposed to manipulating the data itself. It is used to change the spatial perspective from which the user observes the data. This group comes second, after the selection group, and has only two examples at present:

Although many more navigation gestures are possible, especially in views with coordinate systems beyond simple 2-D Cartesian ones, the project's goal of data editing gestures led us to focus primarily on selection and annotation gestures when populating the gallery so far. Annotation here signifies the general creation and modification of data values. The annotation group offers 31 gesture examples, ranging widely in utility from general to much more specialized applications; for example:

Creating the gallery of gesture designs provided a practical means to assess the potential versatility of data editing gestures. Each gesture can be studied in terms of its interactions, underlying geometric shape, and graphical properties. The diverse examples strongly suggest that gestures can readily cover both the general interactions common in visualization tools and more exotic forms of interaction suited to specialized applications. Designing the examples also served as a series of thought experiments, motivating wide-ranging design variations and examination of ways to organize gestures both conceptually and practically.