Designing Gestures for Data Editing (2015–2017)

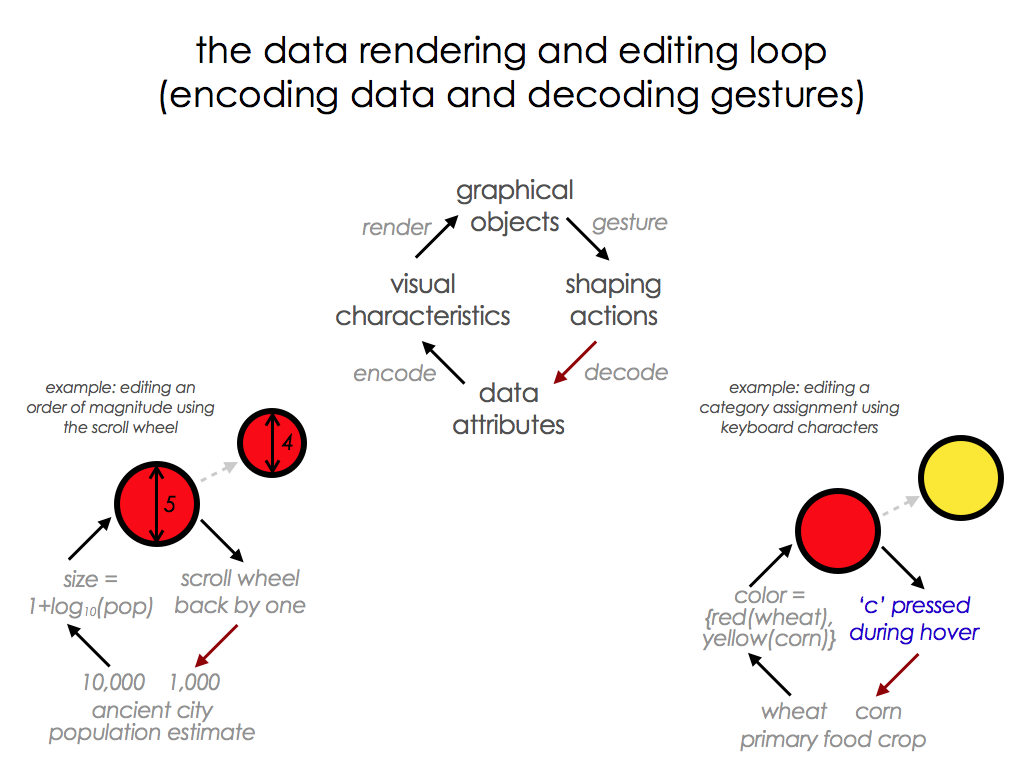

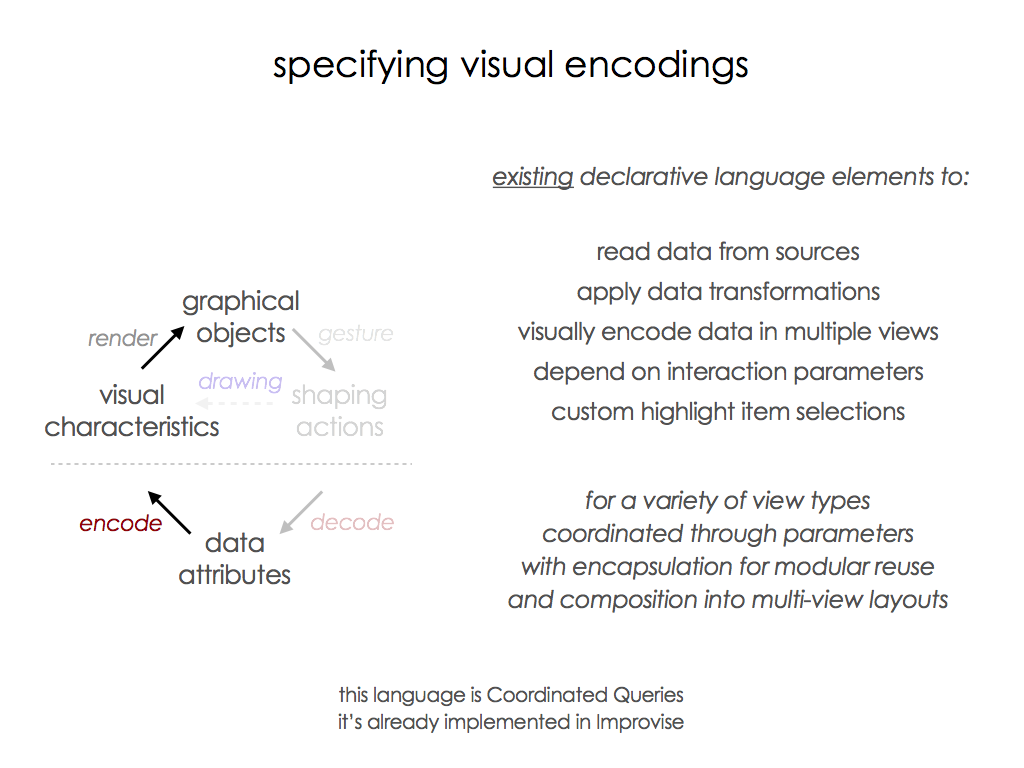

This project aims to expand the theory of visualization design. The human-computer interaction loop of data visualization can be usefully reinterpreted as drawing, painting, or sculpting that is backed indirectly by data rather than directly by configurations of physical media. Interactions in visualization can follow abstract rules of computation, which are less constrained than the concrete rules of physics. Visualization interaction therefore need not be limited to direct manipulation of space (navigation) or pointing at objects in it (selection), and interaction design in data visualization can be extended beyond these two cases in a straightforward way.

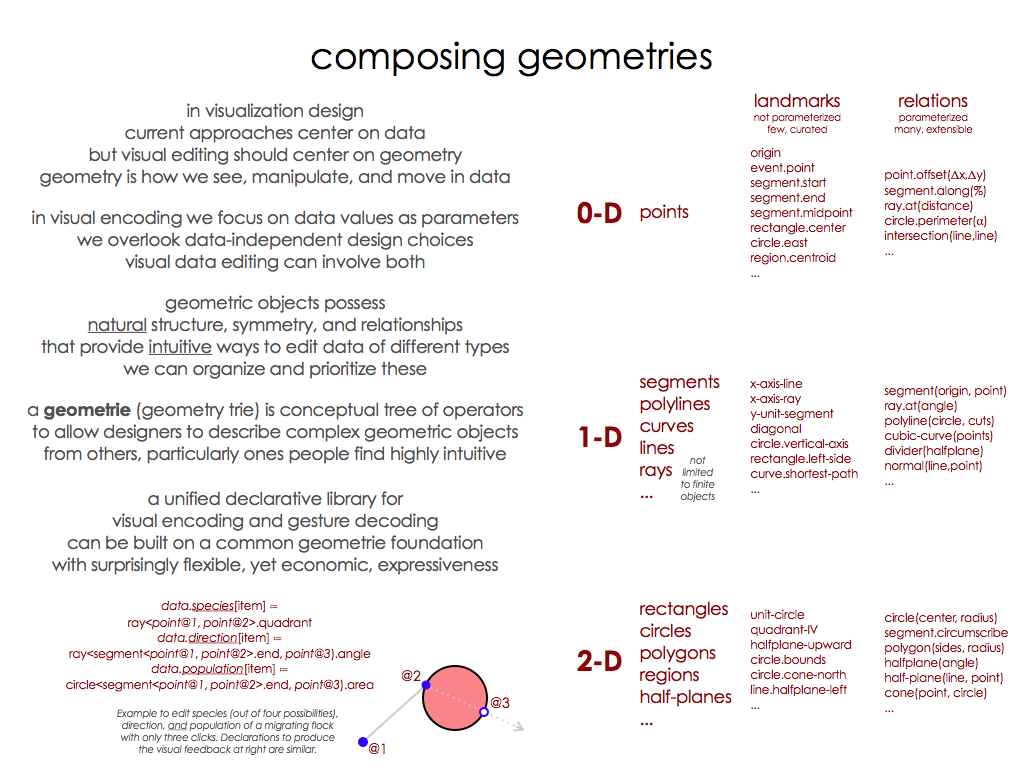

Gestures are movements in a spatial context. They have geometry and other characteristics, and they can affect the geometry and other characteristics of the spatial context, of the objects in it, or of both. From this perspective, navigation is the special case of interaction that transforms the geometry of the context, and selection is the special case that indicates subsets of objects. Visualization tools offer many other interactions, but all or nearly all of them take place in a halo of user interface controls outside the visualization model. Many of these interactions can instead be captured within the gesture-based data editing model.
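To make the two special cases concrete, here is a minimal Python sketch. It assumes that a gesture is recorded as a list of screen-space points and that the context geometry is a simple pan/zoom view. The names `View`, `navigate`, and `select`, and the default hit radius of 5 screen units, are hypothetical illustrations rather than part of the project's model.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple

Point = Tuple[float, float]


@dataclass
class View:
    """Hypothetical context geometry: a 2-D pan offset plus a uniform zoom factor."""
    dx: float = 0.0
    dy: float = 0.0
    scale: float = 1.0

    def to_screen(self, p: Point) -> Point:
        # Map a data-space point into screen space under this view.
        return (p[0] * self.scale + self.dx, p[1] * self.scale + self.dy)


def navigate(view: View, gesture: List[Point]) -> View:
    """Navigation: the gesture's net displacement transforms the context geometry (a pan)."""
    (x0, y0), (x1, y1) = gesture[0], gesture[-1]
    return View(view.dx + (x1 - x0), view.dy + (y1 - y0), view.scale)


def select(view: View, gesture: List[Point], objects: List[Point], radius: float = 5.0) -> Set[int]:
    """Selection: the gesture's final position indicates the objects within `radius` of it on screen."""
    gx, gy = gesture[-1]
    hits = set()
    for i, p in enumerate(objects):
        sx, sy = view.to_screen(p)
        if (sx - gx) ** 2 + (sy - gy) ** 2 <= radius ** 2:
            hits.add(i)
    return hits


drag = [(10.0, 10.0), (15.0, 12.0), (30.0, 20.0)]
view = navigate(View(), drag)
print(view)  # View(dx=20.0, dy=10.0, scale=1.0)
print(select(view, [(21.0, 11.0)], [(0.0, 0.0), (100.0, 100.0)]))  # {0}
```

Both functions consume the same kind of input, a sequence of positions, and differ only in what they change: `navigate` returns a new context, while `select` leaves the context alone and returns a subset of objects.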

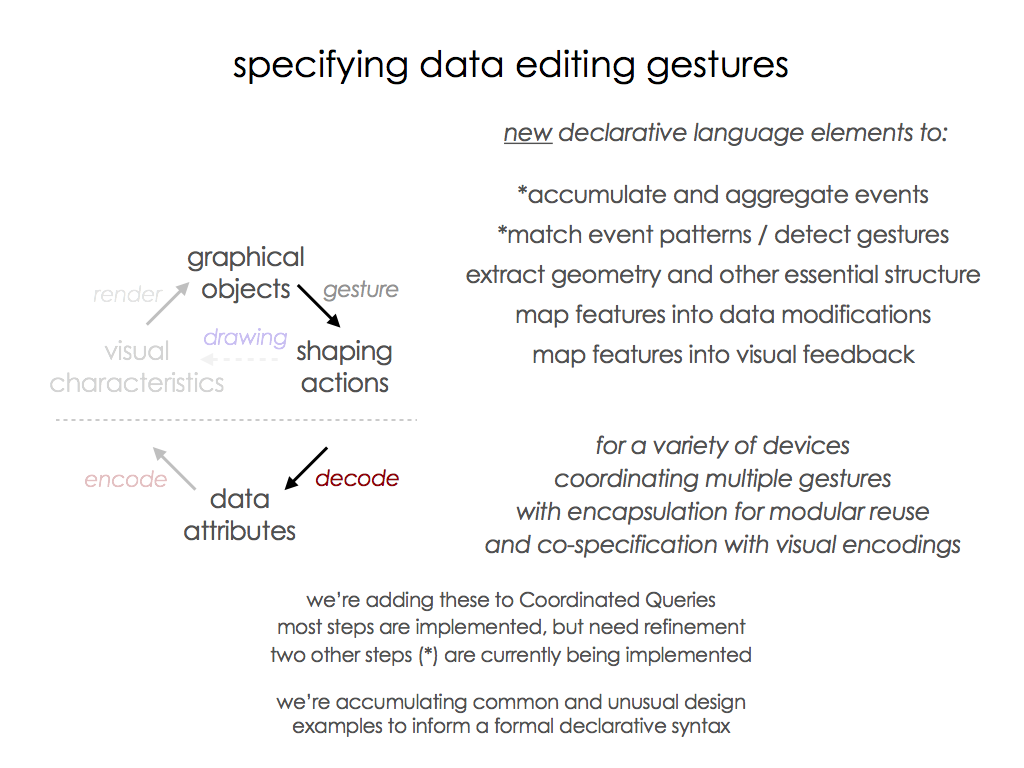

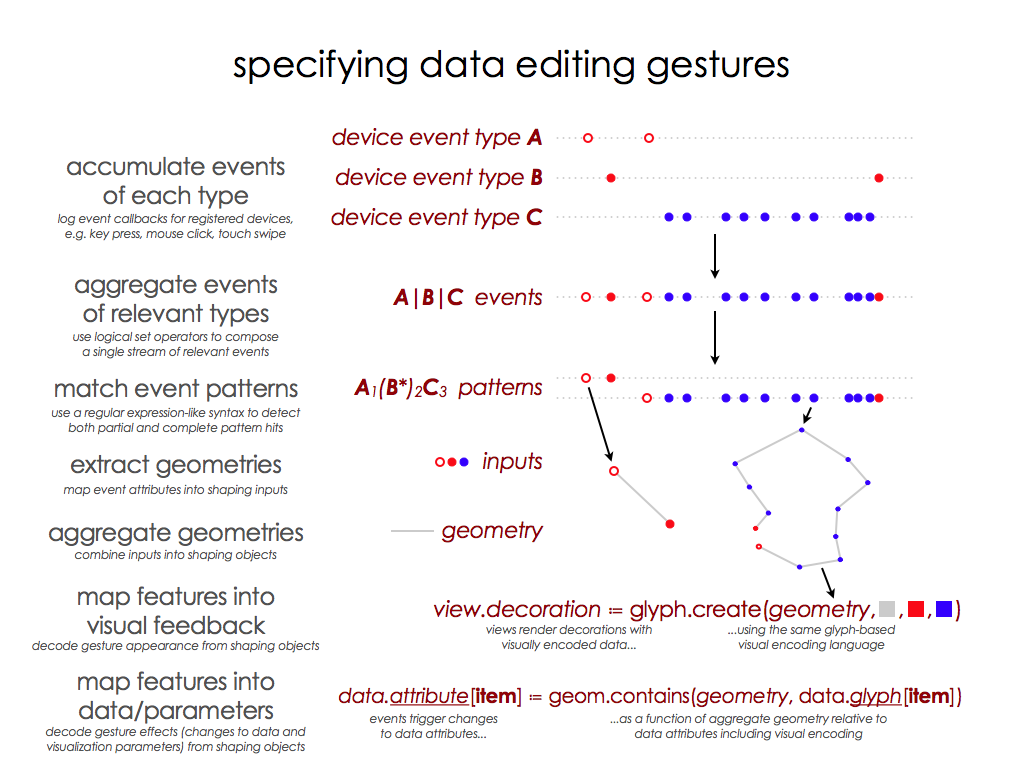

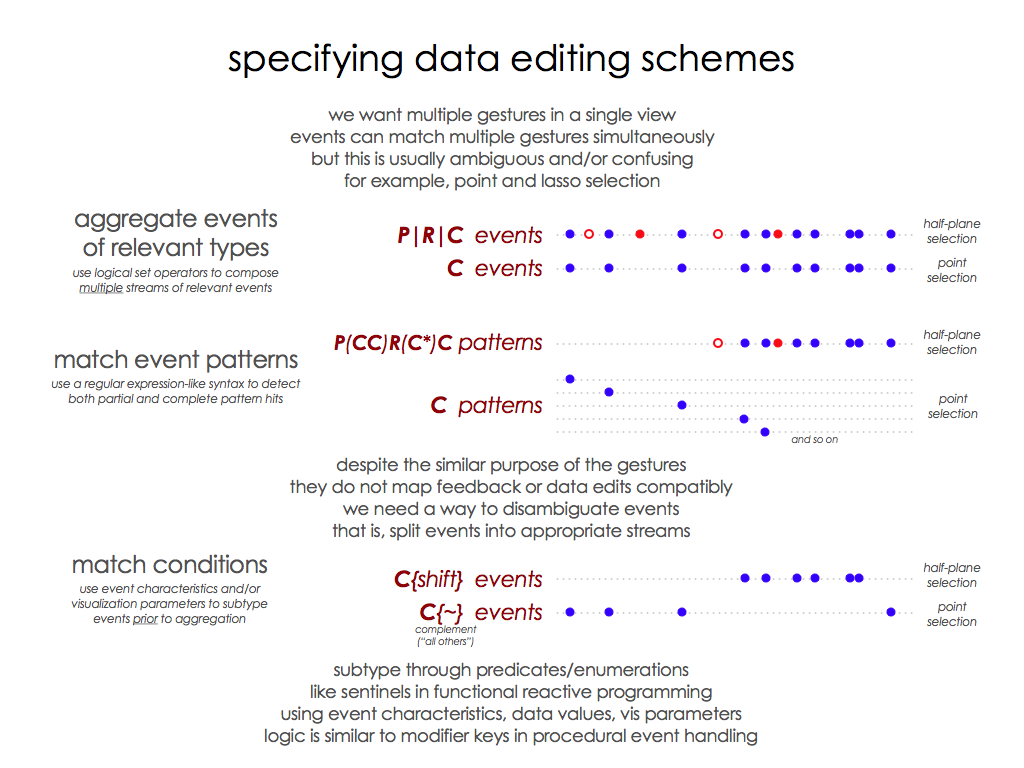

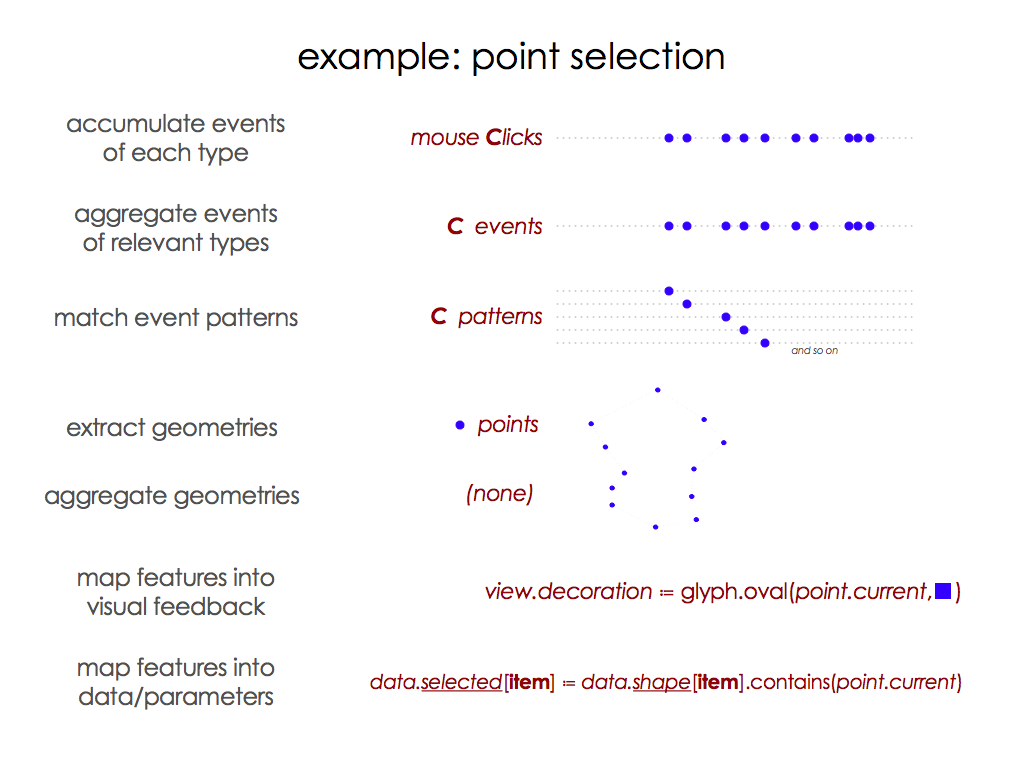

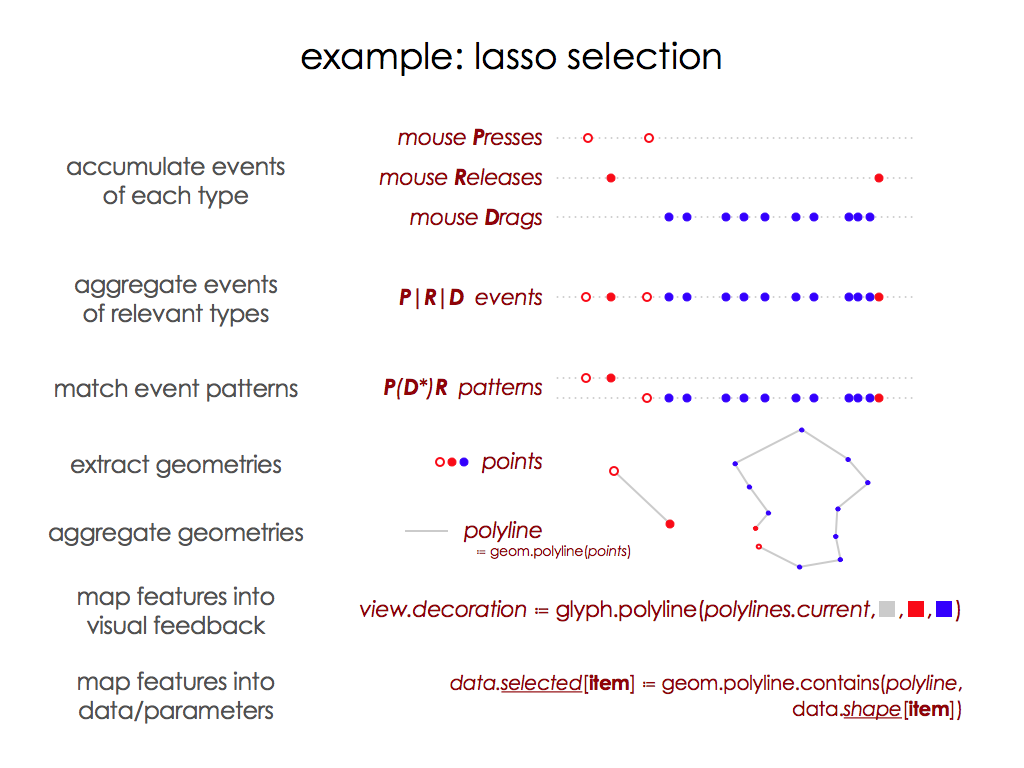

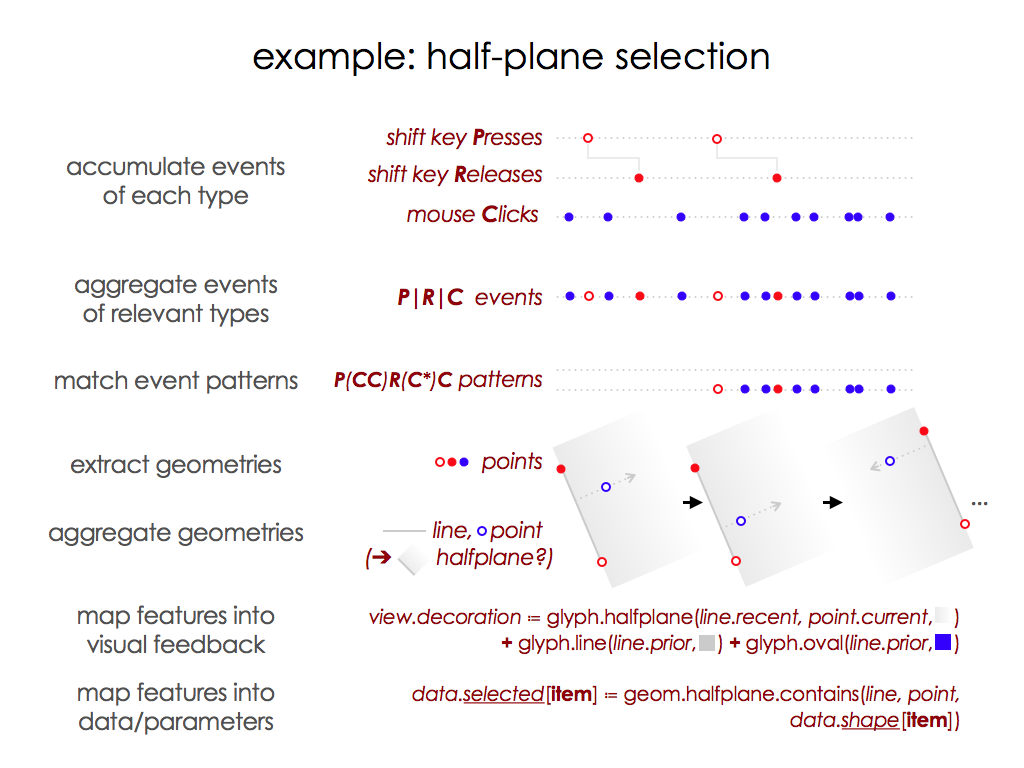

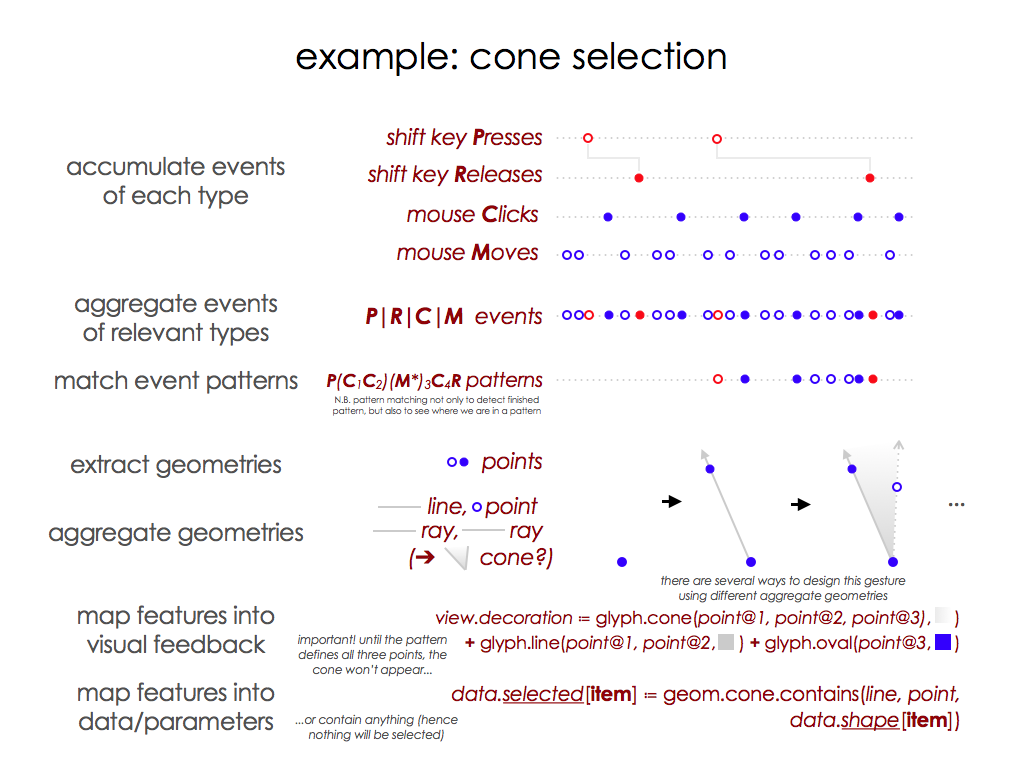

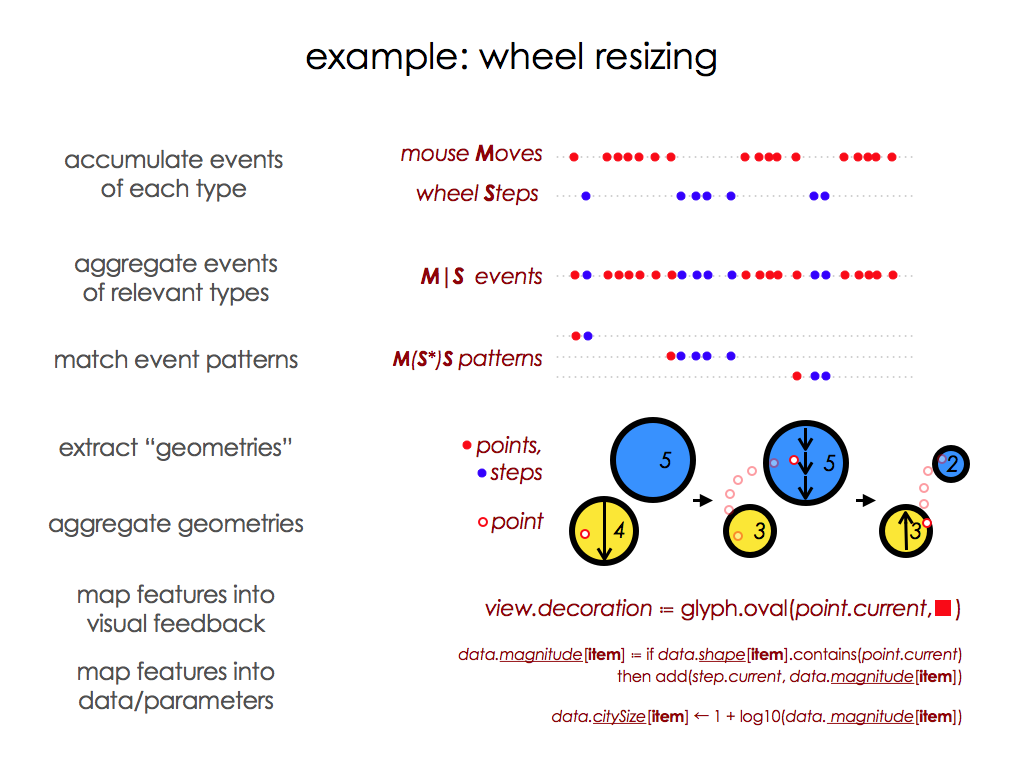

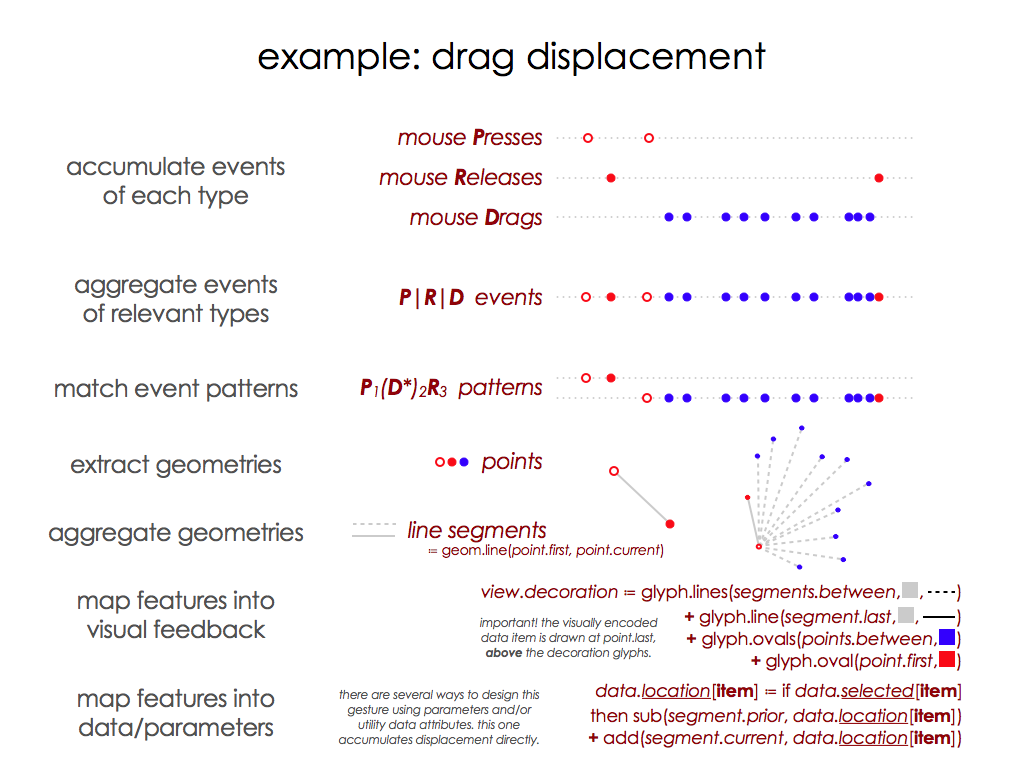

Interactive visual data editing can be modeled on top of existing user input mechanisms, which work by time-sampling event streams from physical input devices. The process of mapping events into data edits can be decomposed into a short sequence of relatively simple declarations. We have specified a semi-formal model that breaks event processing for data editing into eight steps: match conditions, accumulate events, aggregate events, match event patterns, extract geometries, aggregate geometries, map features into feedback, and map features into data/parameters.
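The eight steps above can be sketched as a record of eight small functions composed over a time-ordered event stream. This is a minimal Python illustration under our own assumptions, not the project's specification: the `(time, kind, x, y)` event tuple, the names `GestureSpec`, `run`, and `rectangle_selection`, and the particular reading of each step are all hypothetical. Rectangle selection serves as the example gesture.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Optional, Tuple

# Hypothetical raw event record: (time, kind, x, y), as sampled from a pointing device.
Event = Tuple[float, str, float, float]


@dataclass
class GestureSpec:
    """One declaration per step of the eight-step model (all names are illustrative)."""
    match_condition: Callable[[Event], bool]                 # 1. match conditions
    accumulate: Callable[[List[Event], Event], List[Event]]  # 2. accumulate events
    aggregate_events: Callable[[List[Event]], List[Event]]   # 3. aggregate events
    match_pattern: Callable[[List[Event]], bool]             # 4. match event patterns
    extract_geometry: Callable[[List[Event]], List[Any]]     # 5. extract geometries
    aggregate_geometry: Callable[[List[Any]], Any]           # 6. aggregate geometries
    to_feedback: Callable[[Any], Any]                        # 7. map features into feedback
    to_data: Callable[[Any], Any]                            # 8. map features into data/parameters


def run(spec: GestureSpec, stream: List[Event]) -> Tuple[Optional[Any], Optional[Any]]:
    """Push a time-ordered event stream through the eight steps; return the latest feedback and edit."""
    buffer: List[Event] = []
    feedback, edit = None, None
    for ev in stream:
        if not spec.match_condition(ev):
            continue
        buffer = spec.accumulate(buffer, ev)
        events = spec.aggregate_events(buffer)
        if spec.match_pattern(events):
            shape = spec.aggregate_geometry(spec.extract_geometry(events))
            feedback, edit = spec.to_feedback(shape), spec.to_data(shape)
    return feedback, edit


def rectangle_selection(points: List[Tuple[float, float]]) -> GestureSpec:
    """Example gesture: press-drag-release selects the data points inside the dragged rectangle."""

    def accumulate(buf: List[Event], ev: Event) -> List[Event]:
        if ev[1] == "down":
            return [ev]          # a press starts a new gesture
        if buf and buf[-1][1] != "up":
            return buf + [ev]    # extend the gesture until release
        return buf               # ignore events before a press or after release

    def dedupe(buf: List[Event]) -> List[Event]:
        # Drop events whose position repeats the previous event's position.
        return [e for i, e in enumerate(buf) if i == 0 or e[2:] != buf[i - 1][2:]]

    def bounding_box(pts: List[Tuple[float, float]]) -> Tuple[float, float, float, float]:
        xs, ys = [p[0] for p in pts], [p[1] for p in pts]
        return (min(xs), min(ys), max(xs), max(ys))

    def inside(box: Tuple[float, float, float, float]) -> List[int]:
        x0, y0, x1, y1 = box
        return [i for i, (x, y) in enumerate(points) if x0 <= x <= x1 and y0 <= y <= y1]

    return GestureSpec(
        match_condition=lambda e: e[1] in ("down", "move", "up"),
        accumulate=accumulate,
        aggregate_events=dedupe,
        match_pattern=lambda evs: len(evs) >= 2 and evs[0][1] == "down",
        extract_geometry=lambda evs: [(e[2], e[3]) for e in evs],
        aggregate_geometry=bounding_box,
        to_feedback=lambda box: {"outline": box},
        to_data=inside,
    )


stream = [
    (0.0, "move", 0.0, 0.0),    # hover before the press: ignored
    (1.0, "down", 0.0, 0.0),
    (2.0, "move", 2.0, 3.0),
    (3.0, "move", 2.0, 3.0),    # repeated position: removed by aggregation
    (4.0, "move", 5.0, 5.0),
    (5.0, "up", 5.0, 5.0),
    (6.0, "move", 50.0, 50.0),  # after release: ignored
]
feedback, edit = run(rectangle_selection([(1.0, 1.0), (4.0, 4.0), (9.0, 9.0)]), stream)
print(feedback, edit)  # {'outline': (0.0, 0.0, 5.0, 5.0)} [0, 1]
```

Keeping each step as a separate declaration is what makes the pieces reusable in this sketch: another gesture could, for instance, replace only `aggregate_geometry` and `to_data` while keeping the same event handling.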

We have applied the model to specify several example visualization gestures, including both well-known cases (e.g., point selection) and new ones (e.g., half-plane selection).
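The text does not spell out how half-plane selection is defined, so the following Python sketch assumes one plausible reading: a straight drag defines a directed boundary line through its start and end points, and the selected items are those strictly on the positive side of that line, determined by the sign of a 2-D cross product. The function name `half_plane_select` is hypothetical. In terms of the eight steps listed earlier, this covers only the final mapping from an extracted geometry (the line) to data.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def half_plane_select(start: Point, end: Point, points: List[Point]) -> List[int]:
    """Return indices of points strictly on the positive side of the directed line start -> end.

    Assumption: the gesture is a straight drag whose endpoints define the boundary line.
    The positive side (cross product > 0) is the left side in a y-up coordinate system;
    in y-down screen coordinates it appears on the right.
    """
    (x0, y0), (x1, y1) = start, end
    dx, dy = x1 - x0, y1 - y0
    if dx == 0 and dy == 0:
        return []  # degenerate drag: no line, so no half-plane
    selected = []
    for i, (px, py) in enumerate(points):
        # z-component of the cross product (end - start) x (p - start)
        cross = dx * (py - y0) - dy * (px - x0)
        if cross > 0:
            selected.append(i)
    return selected


pts = [(0.0, 1.0), (0.0, -1.0), (5.0, 0.0)]
print(half_plane_select((0.0, 0.0), (1.0, 0.0), pts))  # [0]
```

Points lying exactly on the boundary line, such as `(5.0, 0.0)` above, are excluded here; whether boundary points should count is a design choice the sketch makes arbitrarily.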

In our experience so far, gesture specifications written with the model appear to be understandable, expressive, economical, and reusable. We anticipate that this approach to data editing will carry over readily and effectively into visualization design practice. Beyond our own application of the model to the digital humanities in this project, we are increasingly hopeful that it could open up the interaction design space to study and broad application throughout the visualization community.